Current Projects

Therabot™ - CHS: Large: Collaborative Research: Participatory Design and Evaluation of Socially Assistive Robots for Use in Mental Health Services in Clinics and Patient Homes

This project is funded by NSF Award #1900883 and includes REU supplements. The Therabot team is developing an adaptive socially assistive robot (SAR) platform by extending the features and capabilities of Therabot. Participatory design methods are used to identify appropriate therapeutic interventions and evaluate their effectiveness in addressing the needs of individuals diagnosed with depression. Therabot is an autonomous, responsive robotic therapy dog with touch sensors and the ability to record therapy sessions and instructions from a therapist for home therapy practice. It uses sound localization to orient toward the user and plays responsive sounds to provide support. The project entails extensive mechanical design, electronics and sensor work, and programming, including artificial intelligence and machine learning. It has provided many opportunities for undergraduate research projects. Visit the Therabot website here for more information.

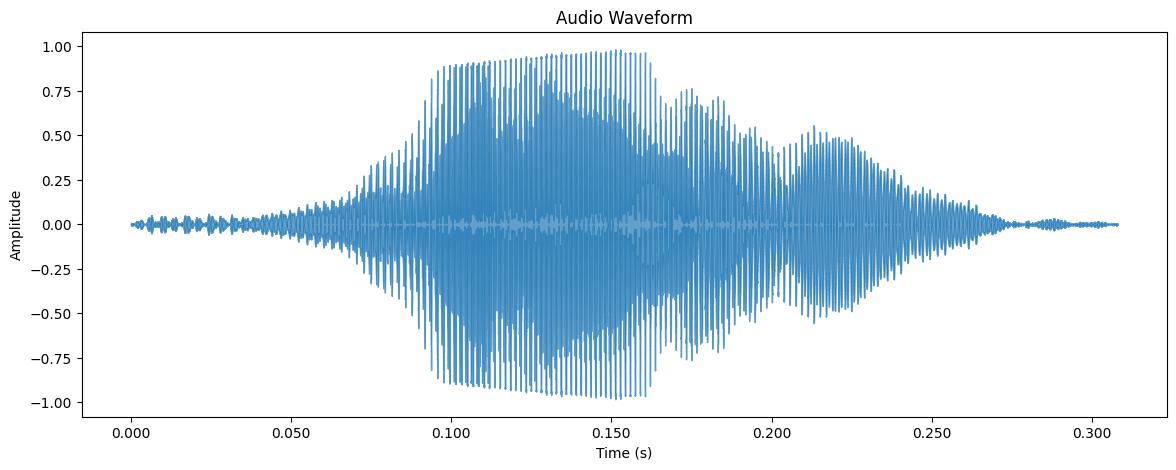

AudioLDM2 Pipeline - An Open Source Pipeline and Interface to Fine-Tune AudioLDM2 and Generate Audio

This project is open source and available in full at this link. The full documentation is available at this link. The project features a convenient interface for adjusting parameters, fine-tuning AudioLDM2, and generating sounds from text prompts. We recommend deploying it using the Docker container developed for use on the cloud server RunPod. This Docker container is available at this link.

Past Projects

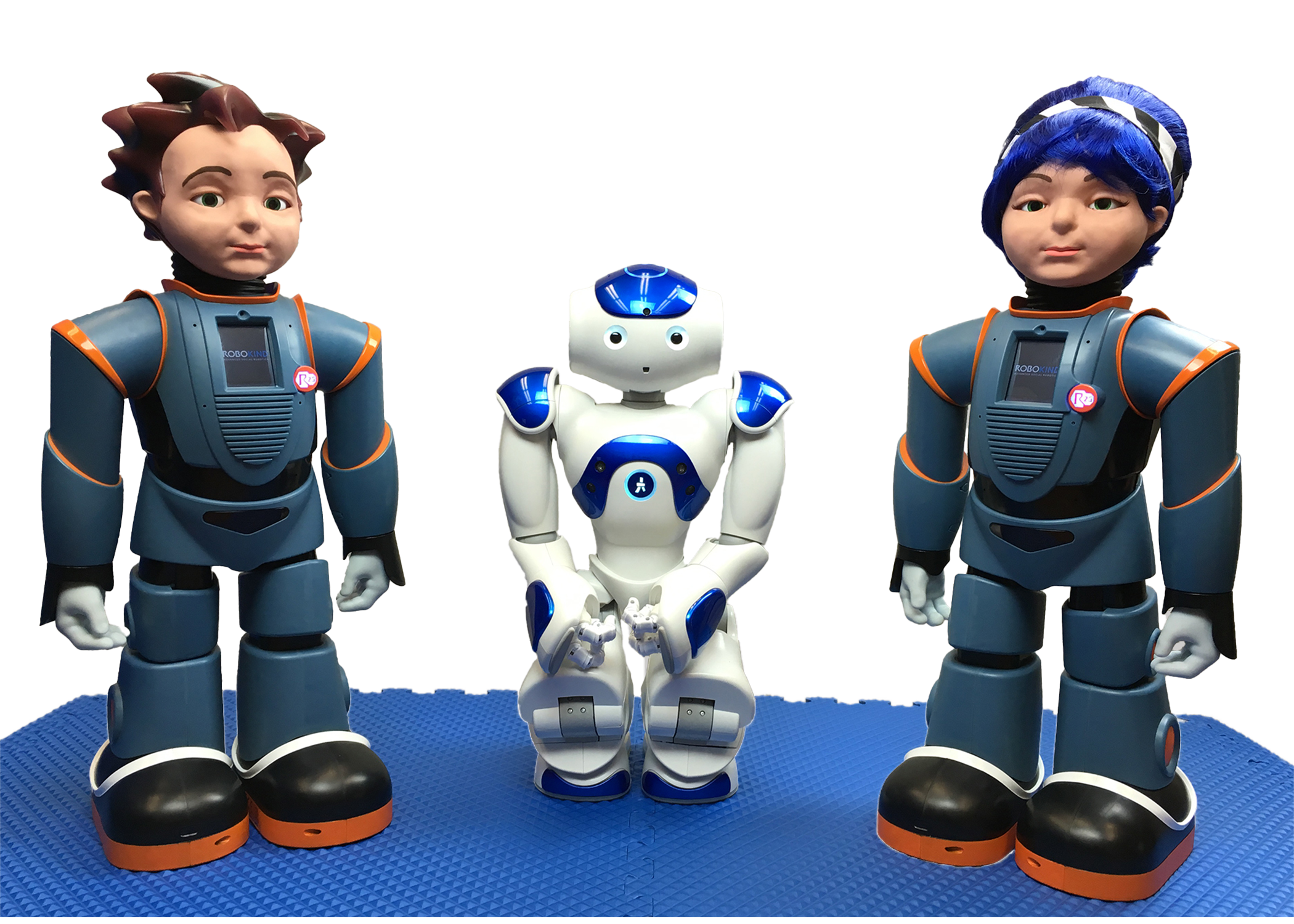

NSF: CHS: The Use of Robots as Intermediaries for Gathering Sensitive Information from Children

This project is funded through NSF Award #1408672 under the Cyber-Human Systems Division of Computer and Information Science and Engineering (CISE). The project has three primary aspects.

The Hybrid Cognitive Architecture aspect includes a review of the research literature that is expected to be published as a journal article. The architecture combines expert knowledge from forensic interviews with sensory processing of the environment and a decision-making process. Undergraduate and graduate students working on this project write software that includes artificial intelligence, machine learning, algorithms, and other computational systems.

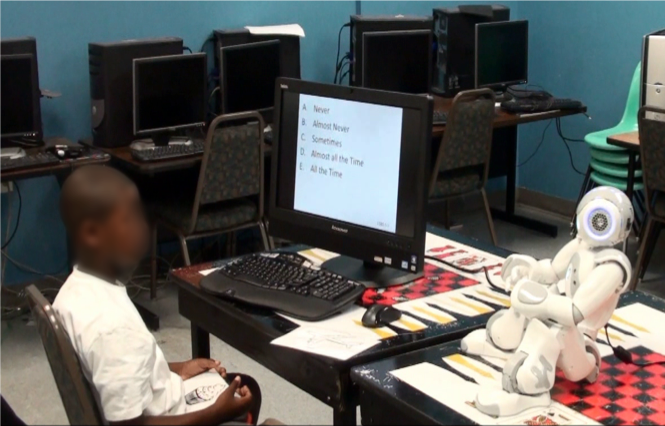

In the Eyewitness Memory aspect of this project, a Nao robot or a human interviews children about an incident they observed. The goal is to determine whether children are more likely to answer questions about their experiences accurately with a robot interviewer or with a human interviewer. The children are between the ages of 8 and 12. The project gives undergraduates the opportunity to learn about collecting and analyzing data. Some student projects have included interface design for operating the robot through a Wizard-of-Oz approach. The students have also programmed the robot behaviors to enhance the human-robot interactions.

Additionally, there is the aspect of Gathering Information about the Experiences of Children with Bullying. This aspect is similar to the Eyewitness Memory aspect of the project, but in this set of studies, either the Nao robot or a human interviewer asks children about their experiences with bullying in school. The children are between the ages of 8 and 12. The undergraduate students have served as interviewers and researchers, programmed the robot behaviors and responses, and developed the user interfaces.

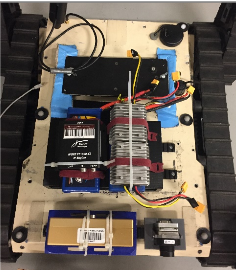

Hydra: A Modular, Universal Multisensor Data Collection System

The Sensor Analysis and Intelligence Laboratory (SAIL) at Mississippi State University’s Center for Advanced Vehicular Systems (CAVS), in collaboration with the Social, Therapeutic, and Robotic System (STaRS) Lab, has designed and implemented a modular platform for automated sensor data collection and processing, named the Hydra. The Hydra is an open-source system (all artifacts and code are published to the research community). It consists of a modular rigid mounting platform (sensors, processors, power supply and conditioning) that utilizes Picatinny rails (typically used for rifles and scope mounts), a software platform utilizing the Robot Operating System (ROS) for data collection, and design packages (schematics, CAD drawings, etc.). The Hydra system streamlines sensor mounting so researchers can select sensors, put them together, and collect data.

Survivor Buddy

Survivor Buddy is a two-way communication device attached to a robotic arm that can be mounted to a mobile robotic base. It is intended for use in disaster areas like building collapses to reach people trapped under rubble and communicate both their position and condition. The project has been the focus of undergraduate research for the development of new interactive interfaces for controlling the robot. The Survivor Buddy has also been used in studies related to the development of vocal prosody in robotic speech. The latest efforts have been devoted to operating the different degrees of freedom of the robot through a mobile application using the accelerometer and gyroscope.

Robot Intent

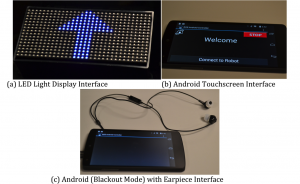

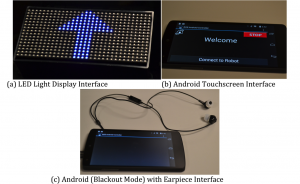

The Robot Intent project involved the development and testing of three interfaces for communicating a robot’s intended movements to people operating near the robot. The three interfaces were a mobile Android device that displayed the next intended movement, an earpiece that gave audio messages of the next intended movement, and an LED light display that showed directional arrows for the next intended directions in both visual and infrared LED displays. As a control condition in the study, the participants used a Logitech gamepad controller to manually operate the robot, as is typical of robots operating with people in close proximity. This project involved designing and building the LED display, designing and programming the Android interface, and setting up the audio messaging system. This project was led by undergraduate researchers and resulted in a conference publication.

The other aspect of this project was the development of control interfaces to override autonomy based on intended messages through supervisory control of the robot. Undergraduate and graduate researchers created three interfaces for overriding a semi-autonomous robot’s behaviors. The three interfaces were a touchscreen Android mobile device to direct the movements of the robot, a voice command through a microphone attached to the Android mobile device, and the use of a Kinect to record directions for the robot to move through arm gestures. This resulted in a publication for the undergraduate and graduate researchers.

Both of these projects are in support of improving operations between a robot and a team of humans, such as tactical or search and rescue teams. A study was conducted for both intent and control interfaces using a maze in which the participants navigated a Turtlebot robot.

Vocal Prosody in Robotic Speech

Most HRI research concerning speech investigates speech directed from human to robot. There has been relatively little research on the reverse communication channel: speech directed from robot to human. Using the Survivor Buddy robot platform, we are conducting experiments to determine whether conveying emotion in robotic speech will increase the quality of human-robot interactions.